PCC Database Scaling

This section provides an overview of how to horizontally scale a PCC subscriber database by spreading it across multiple shards on multiple physical servers. It also outlines how multiple tenants can share the same DRE instance.

Introduction

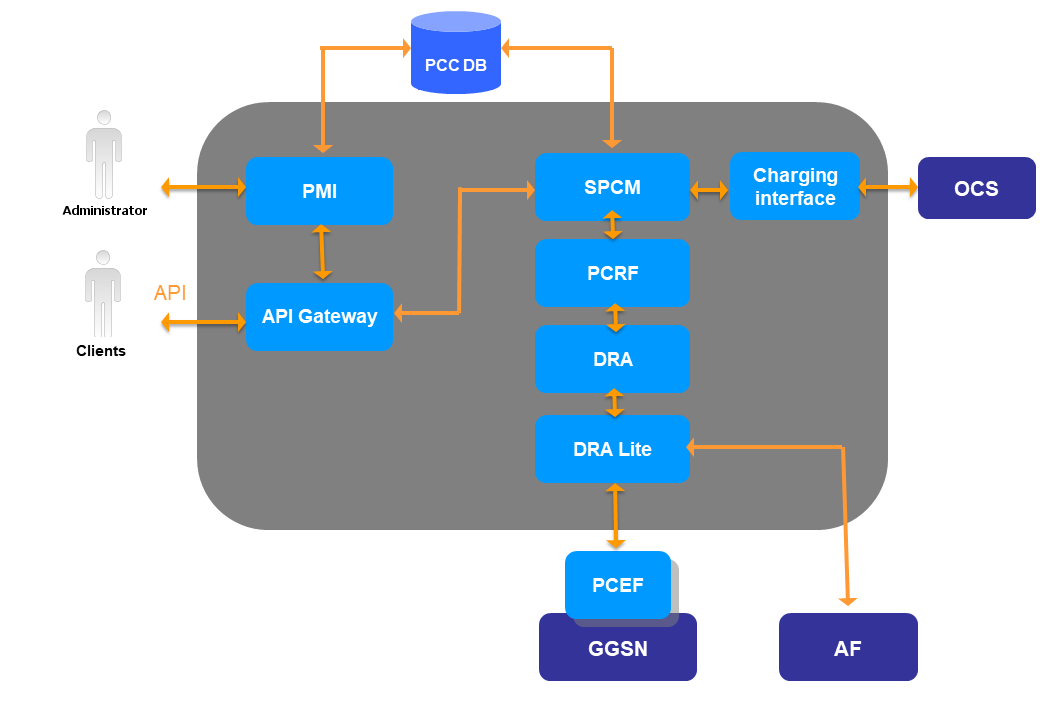

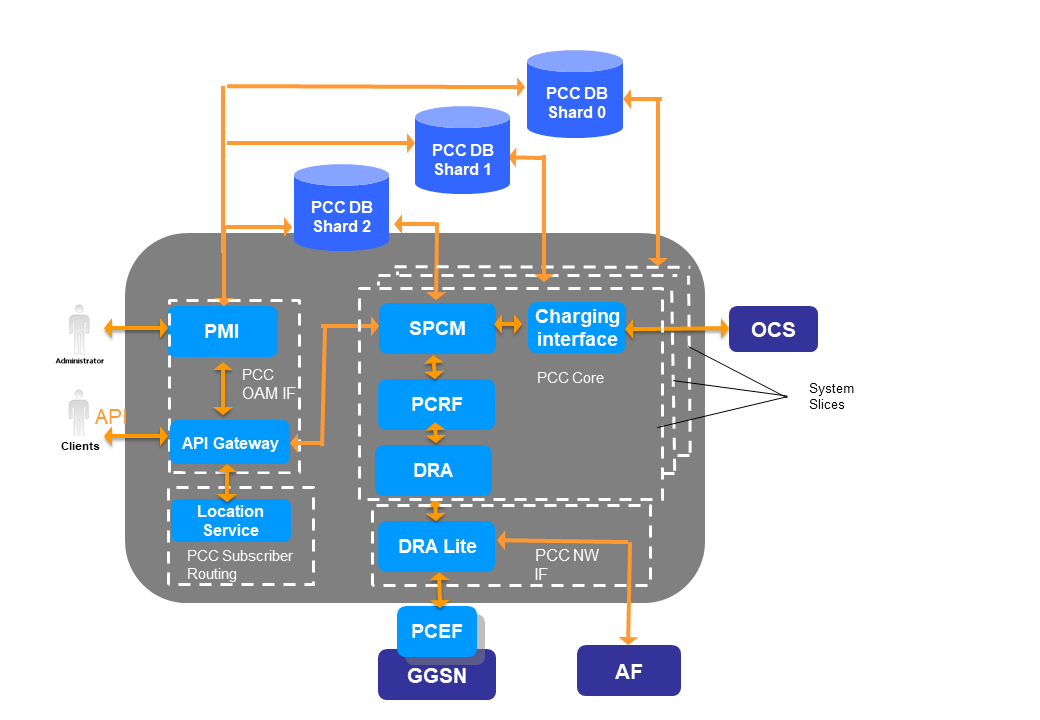

The Tango DRE system, as shown below, traditionally operates with a single PCC subscriber database. For scaling purposes, the PCC subscriber database can instead be divided into multiple shards that reside on multiple physical servers. Advantages of this sharded approach include the following:

- an efficient and stable way to manage system scaling
- an ability to cater for virtualised installations
- facilitation of partial upgrades

In the traditional DRE system, the API gateway component operates as a single entry point for HTTP API requests from clients. The API gateway also routes requests onwards to backend services, manages encryption (HTTPS termination), and translates protocols. The DRA Lite component operates as a single point of entry for network Diameter traffic.

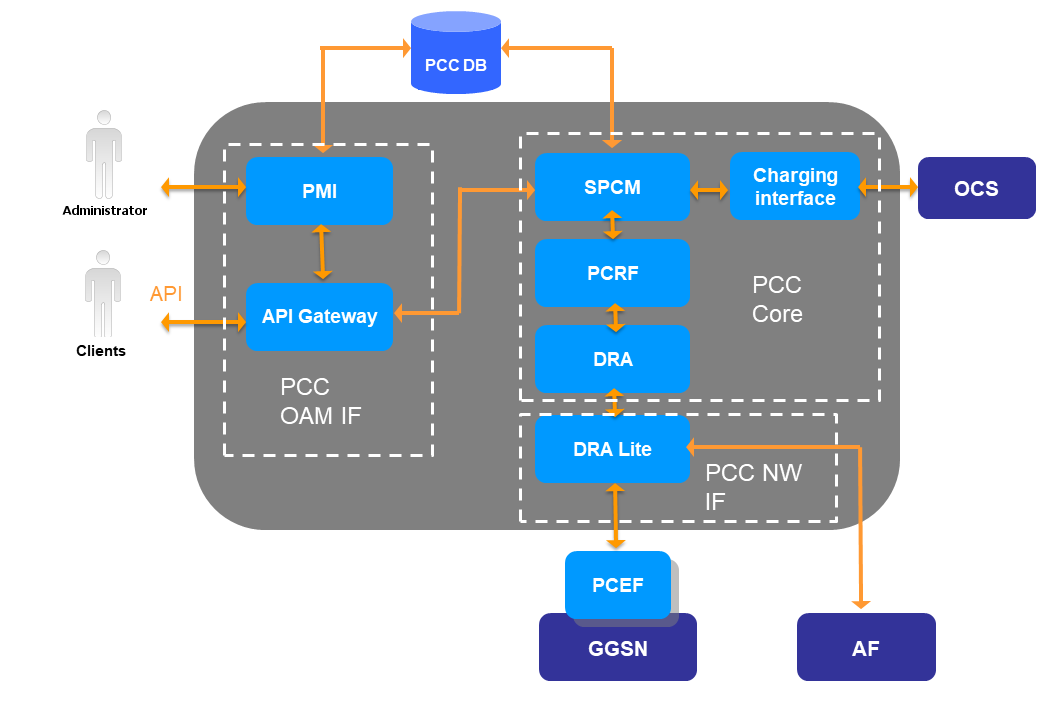

Logical Groupings

Logically, the elements of the DRE system can be grouped as follows:

- The PMI and API Gateway make up the PCC OAM interface
- The SPCM, charging IF, PCRF, and DRA make up the PCC core
- The DRA Lite forms the PCC network interface with the PCEF and AF

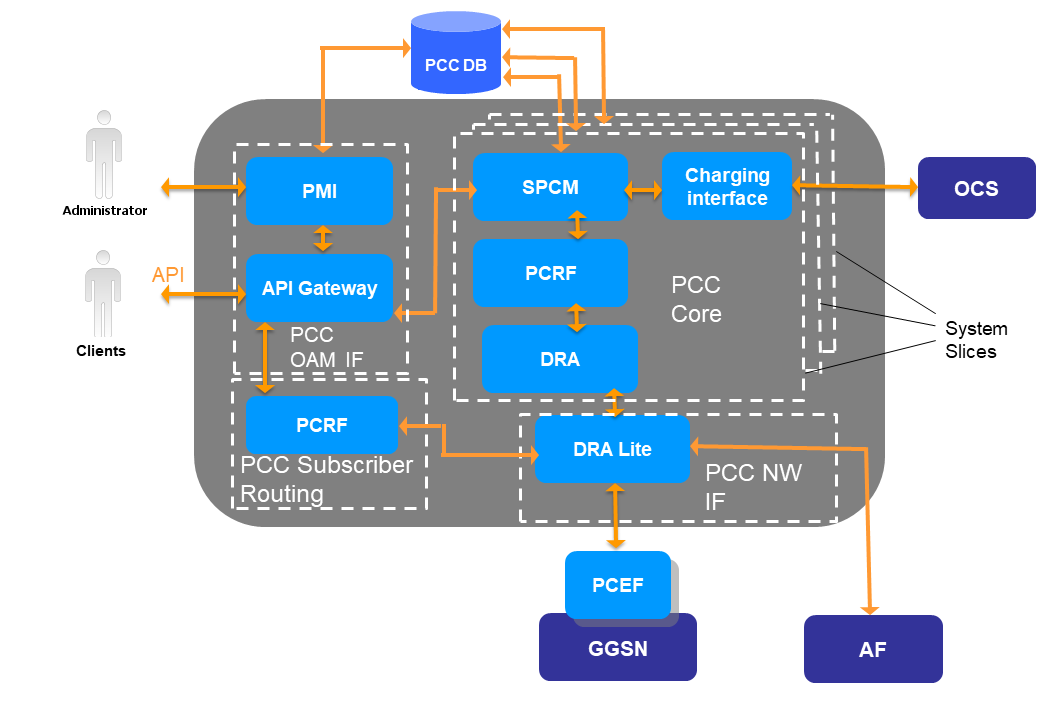

System Slices

The PCC core grouping of components can be replicated and scaled horizontally to meet growing traffic demands. Each PCC core group is referred to as a system slice. In a sliced system, each slice handles API and data session requests for a subset of subscribers.

| A subset of subscribers can be defined as those belonging to a particular tenant in a multi-tenant arrangement, a range of subscribers, a static list of subscribers, a set of subscribers that match a particular expression (for example, those whose MSISDN ends in zero), or any combination thereof. |
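The subset definitions above can be pictured as composable predicates. The following is a minimal sketch only: the `Subscriber` model and the helper names (`by_tenant`, `by_range`, `by_list`, `by_last_digit`, `all_of`, `any_of`) are hypothetical and are not part of the DRE configuration syntax.

```python
from dataclasses import dataclass
from typing import Callable, Iterable

# Hypothetical subscriber model reduced to the fields this sketch needs.
@dataclass(frozen=True)
class Subscriber:
    msisdn: str
    tenant: str

Predicate = Callable[[Subscriber], bool]

def by_tenant(tenant: str) -> Predicate:
    # Subscribers belonging to one tenant.
    return lambda s: s.tenant == tenant

def by_range(low: str, high: str) -> Predicate:
    # Inclusive numeric MSISDN range.
    return lambda s: int(low) <= int(s.msisdn) <= int(high)

def by_list(msisdns: Iterable[str]) -> Predicate:
    # Static list of subscribers.
    members = frozenset(msisdns)
    return lambda s: s.msisdn in members

def by_last_digit(digit: str) -> Predicate:
    # Expression match: last digit of the MSISDN.
    return lambda s: s.msisdn.endswith(digit)

def all_of(*preds: Predicate) -> Predicate:
    # Combination: every predicate must match.
    return lambda s: all(p(s) for p in preds)

def any_of(*preds: Predicate) -> Predicate:
    # Combination: at least one predicate must match.
    return lambda s: any(p(s) for p in preds)
```

For example, `all_of(by_tenant("tenant-a"), by_last_digit("0"))` describes the subscribers of one tenant whose MSISDN ends in zero.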

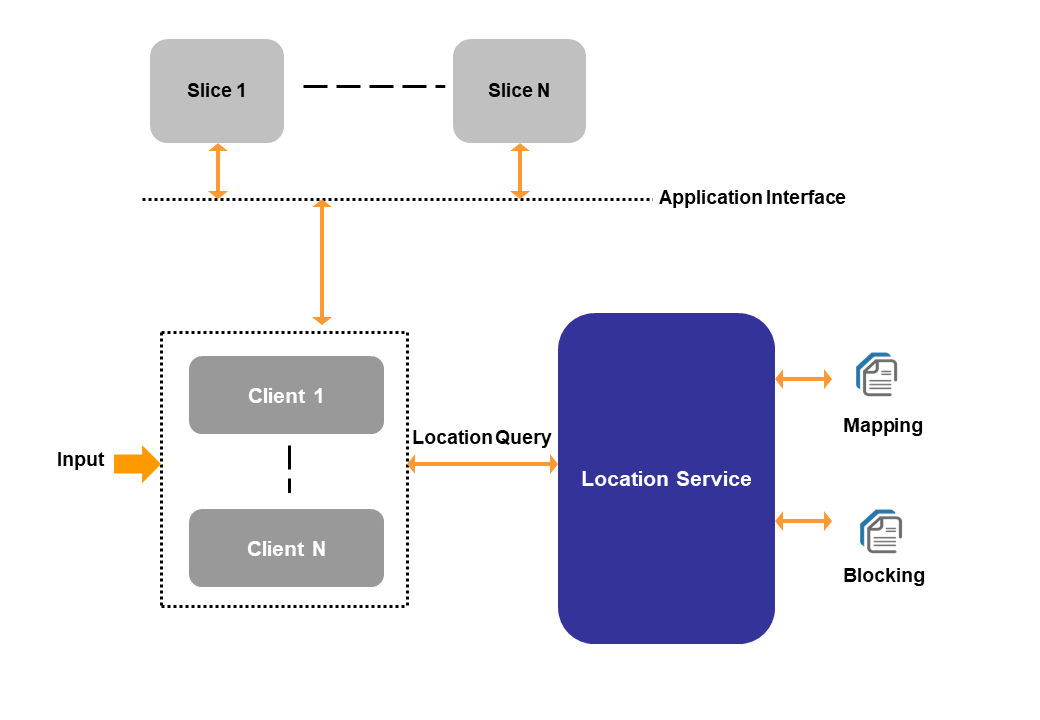

To route incoming requests arriving at the DRE to the appropriate slice, a Location Service component is added to the DRE architecture. An API gateway queries this Location Service to route API requests. Internally, an NGINX proxy performs this Location Service lookup.

| NGINX provides SSL termination, security, and API throttling. For some deployments it may be necessary to deploy NGINX in a separate network segment to provide secure access to external clients. For example, NGINX could be deployed alone in the DMZ and all other services placed behind a firewall. |

Routing can be based on MSISDN or IMSI (prefixes or ranges).

The API gateway can also provide blocking capability during upgrade/maintenance windows whereby requests for a specific subscriber subset can be locally terminated (e.g. by responding with HTTP 503 - Service Unavailable).
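The routing and blocking behaviour described above might be sketched as follows. This is an illustration under stated assumptions, not the gateway's actual logic: the prefix table, the slice names, the longest-prefix-wins rule, the choice to block at slice granularity, and the 404 response for an unmatched subscriber are all hypothetical.

```python
from http import HTTPStatus

# Hypothetical prefix-to-slice table (assumption: longest matching prefix wins).
PREFIX_TO_SLICE = {"35386": "slice-1", "35387": "slice-2", "353": "slice-3"}

# Hypothetical set of slices currently in an upgrade/maintenance window.
BLOCKED_SLICES = {"slice-2"}

def route(msisdn):
    """Return (HTTP status, slice ID or None) for a request keyed on MSISDN."""
    for prefix in sorted(PREFIX_TO_SLICE, key=len, reverse=True):
        if msisdn.startswith(prefix):
            slice_id = PREFIX_TO_SLICE[prefix]
            if slice_id in BLOCKED_SLICES:
                # Terminate locally instead of forwarding to the slice.
                return HTTPStatus.SERVICE_UNAVAILABLE, None
            return HTTPStatus.OK, slice_id
    # Assumption: a subscriber that matches no rule is rejected.
    return HTTPStatus.NOT_FOUND, None
```

With this table, a request for `353871111111` is answered locally with 503 while `slice-2` is blocked, and a request for `353861111111` is forwarded to `slice-1`.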


The DRA also queries the Location Service to route Diameter INIT requests to the correct subscriber slice (the correct PCRF or pool of PCRFs). The DRA automatically routes the remaining Diameter requests (for example, USAGE and TERMINATE) to the correct subscriber slice based on the destination host AVP.
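The two routing paths can be sketched as below. This is a simplified model, not the DRA implementation: requests are plain dictionaries, and `PCRF_BY_SLICE`, `locate_slice`, the host names, and the even/odd rule are hypothetical stand-ins for the real Location Service lookup.

```python
# Hypothetical slice-to-PCRF table.
PCRF_BY_SLICE = {"slice-1": "pcrf1.example.net", "slice-2": "pcrf2.example.net"}

def locate_slice(imsi: str) -> str:
    # Stand-in for the Location Service query.
    # Assumed rule for illustration: even final digit -> slice-1, odd -> slice-2.
    return "slice-1" if int(imsi[-1]) % 2 == 0 else "slice-2"

def route_request(request: dict) -> str:
    """Return the PCRF host that should receive this Diameter request."""
    if request["type"] == "INIT":
        # New session: ask the Location Service which slice owns the subscriber.
        return PCRF_BY_SLICE[locate_slice(request["imsi"])]
    # Existing session: the destination host already names the PCRF.
    return request["destination_host"]

init = {"type": "INIT", "imsi": "272011234567890"}
pcrf = route_request(init)

# Later requests in the same session carry the PCRF chosen for the INIT request.
usage = {"type": "USAGE", "imsi": "272011234567890", "destination_host": pcrf}
```

Only the INIT request costs a Location Service lookup in this sketch; later requests in the session are routed from the request itself.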

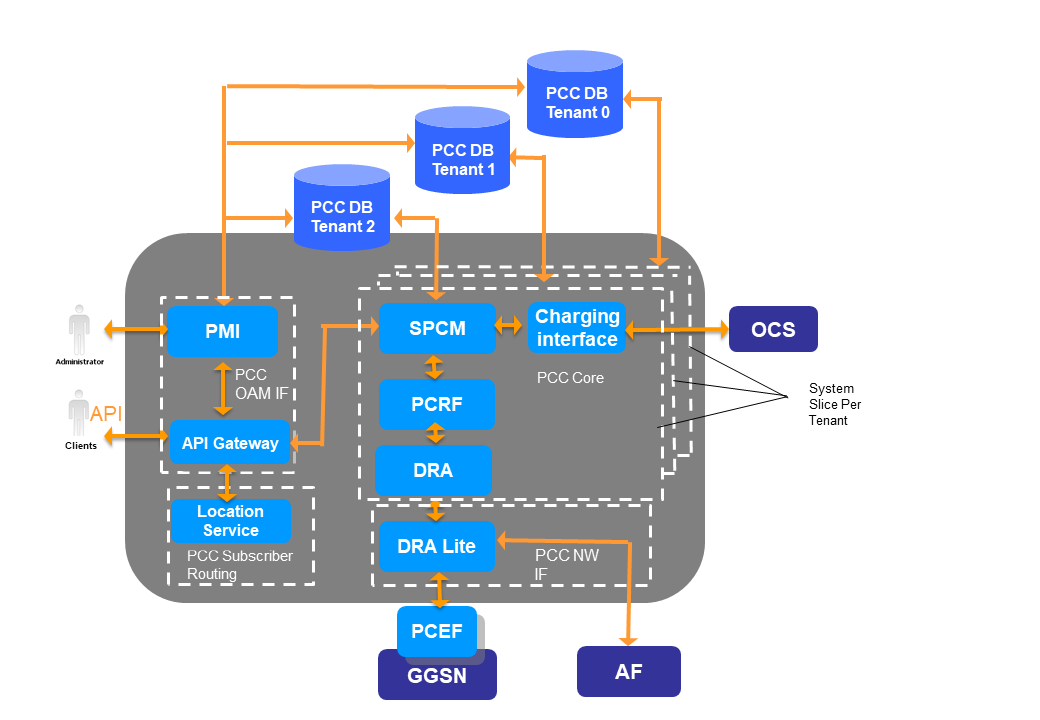

A sliced system is illustrated in the following visual:

When system slices have been set up, you can shard the backend database and thereby horizontally scale a single monolithic subscriber database. Each shard contains a subset of the subscribers. A sharded database system structure is illustrated below:

The Location Service

Where slices are employed, the Location Service (LS) maps incoming requests from clients to the appropriate system slice. Applications query the LS to establish the location of a given subscriber. The LS uses algorithms to determine the following information for any given subscriber:

- the subscriber status, allowed or blocked, through a check against subscriber lists
- the location routing key (slice ID) to be returned to the application, using a primary key such as MSISDN or IMSI

| Algorithm rules are defined in a local subscriber routing configuration file. An SE method reloads this configuration file as required. For more information, see the iAX™ PCC Administration Guide [Ref. 8]. |
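The two-step determination described above (status check, then routing key) could look like the following sketch. The rule data, the `Location` result type, and the behaviour for blocked or unmatched subscribers are assumptions made for illustration; the real rules live in the subscriber routing configuration file, whose format is not shown here.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical blocked-subscriber list and MSISDN range rules.
BLOCKED = {"353860000001"}
RANGES = [
    (353860000000, 353864999999, "slice-1"),
    (353865000000, 353869999999, "slice-2"),
]

@dataclass(frozen=True)
class Location:
    status: str              # "allowed" or "blocked"
    slice_id: Optional[str]  # routing key, or None if none applies

def lookup(msisdn: str) -> Location:
    # Step 1: subscriber status, checked against the subscriber list.
    if msisdn in BLOCKED:
        # Assumption: no routing key is returned for a blocked subscriber.
        return Location("blocked", None)
    # Step 2: routing key, derived from the primary key (MSISDN here).
    number = int(msisdn)
    for low, high, slice_id in RANGES:
        if low <= number <= high:
            return Location("allowed", slice_id)
    # Assumption: an unmatched subscriber is allowed but has no slice.
    return Location("allowed", None)
```

A caller would typically inspect `status` first and forward the request only when a `slice_id` is present.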

The LS facilitates location queries made through the following three interfaces:

- SE: SE request sent to the LS and SE response received from the LS
- HTTP: HTTP GET request sent to the LS and HTTP response received from the LS
- Diameter Gx: CCR sent to the LS and CCA received from the LS. Diameter Gx supports the TCP and SCTP protocols.

Multi-Tenancy

A single DRE platform can be shared by multiple operators or tenants. Each tenant shares the same OAM, NW IF, and subscriber routing layers while one or more system slices are allocated to each tenant. Each tenant has its own PCC core system, PMI login (with access limited to its own data), and discrete subscriber database.

All HTTP and data session traffic is routed to a single pool of SPCMs and PCRFs using a provided Tenant ID header or via a Location Service lookup request. Each tenant has a separate database schema.

| Any given tenant using a multi-tenancy DRE platform can employ multiple slices. |
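The tenant resolution described in this section (a provided Tenant ID header, or otherwise a Location Service lookup) can be sketched as below. The header name `X-Tenant-Id`, the pool table, and the `demo_lookup` rule are hypothetical; the source does not specify them.

```python
from typing import Callable, Mapping

# Hypothetical tenant-to-pool table; each pool stands for that tenant's SPCMs and PCRFs.
POOL_BY_TENANT = {"tenant-a": "pool-a", "tenant-b": "pool-b"}

def resolve_pool(headers: Mapping[str, str], msisdn: str,
                 lookup_tenant: Callable[[str], str]) -> str:
    """Pick the tenant pool for a request."""
    # Prefer the tenant named in the request header (header name is assumed).
    tenant = headers.get("X-Tenant-Id")
    if tenant is None:
        # No header supplied: fall back to a Location Service lookup.
        tenant = lookup_tenant(msisdn)
    return POOL_BY_TENANT[tenant]

def demo_lookup(msisdn: str) -> str:
    # Stand-in for the Location Service; the prefix rule is illustrative only.
    return "tenant-b" if msisdn.startswith("35387") else "tenant-a"
```

In this sketch, `resolve_pool({"X-Tenant-Id": "tenant-a"}, "353870000000", demo_lookup)` uses the header and skips the lookup, while the same call with empty headers falls back to `demo_lookup`.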